How to Evaluate Whether a Vision System's Inspection Accuracy Meets Requirements

Friends in manufacturing and automation, gather around! Today, let's talk about a crucial topic: how do you tell whether a vision system's accuracy is up to standard? Anyone who works in measurement knows that accuracy matters above all. When we measure dimensions with a vision system, some variation is inevitable; what differs from one system to another is the decimal place at which that variation shows up.

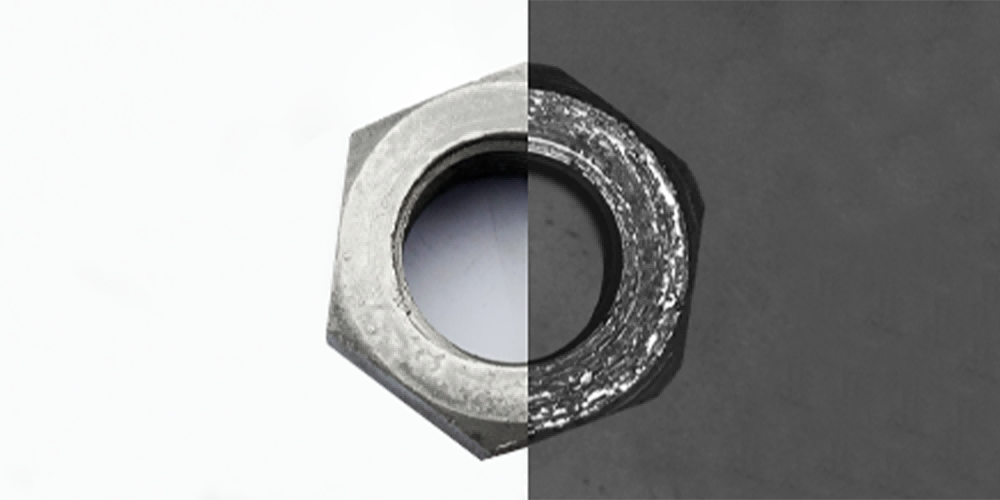

For example, suppose we are measuring the diameter of a ring with a tolerance of 0.1 mm. By the usual rule, the accuracy of the measuring tool should be 1/10 of the tolerance, that is, 0.01 mm. But what exactly does this 0.01 mm accuracy refer to? The industry has no unified answer. Some people take it to mean repeatability, while others say it is the absolute accuracy within the field of view.

Based on our experience, we might as well define it as the accuracy obtained when the same product is fed through inspection multiple times. Take the optical sorting machines commonly seen on the market as an example. How do we determine such a machine's inspection accuracy for a product? Feed the same product through 30 times. After the guide mechanism has positioned it on each pass, the difference between the maximum and minimum measured values is the inspection accuracy.
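The 30-pass procedure above can be sketched in a few lines of Python. This is only an illustration: the function name `inspection_accuracy`, the `min_runs` parameter, and the readings are made up for the example and do not come from any real machine.

```python
def inspection_accuracy(readings_mm, min_runs=30):
    """Return max - min of repeated readings of the same part, in mm.

    min_runs is a hypothetical guard that enforces the 30 passes
    described in the text.
    """
    if len(readings_mm) < min_runs:
        raise ValueError(
            f"need at least {min_runs} readings, got {len(readings_mm)}"
        )
    return max(readings_mm) - min(readings_mm)


# Made-up readings: 30 diameters in mm, NOT real measurement data.
readings = [10.000 + 0.001 * (i % 4) for i in range(30)]

spread = inspection_accuracy(readings)
print(f"inspection accuracy (max - min): {spread:.3f} mm")
```

The readings must come from full passes through the machine, feeding and guiding included, so that disk runout and guiding error end up in the spread.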

However, many manufacturers quote the dimensional variation measured while the product sits stationary as the accuracy, which is very misleading! The runout error of the glass disk, the guiding error, and other error sources must also be taken into account. Unfortunately, once these are included, reaching an accuracy of 3 microns is already quite remarkable for optical equipment. Strictly speaking, under the 1/10 rule, this means we can only measure dimensions with a tolerance of 0.03 mm or wider.

But in actual production, dimensions with a tolerance of 0.01 mm often need to be measured. Many customers therefore also accept measuring tools whose accuracy is 1/3 or 1/5 of the tolerance. After all, even a micrometer will inevitably have an error of a few microns.
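To make the arithmetic concrete, here is a small sketch that checks an accuracy figure against the 1/10, 1/5, and 1/3 ratios mentioned above. The function names are hypothetical, and values are kept in whole microns so the comparisons stay exact.

```python
def smallest_tolerance_um(accuracy_um, ratio_denominator=10):
    """Narrowest tolerance (in microns) that a tool of the given
    accuracy can cover under a 1/ratio_denominator rule."""
    return accuracy_um * ratio_denominator


def meets_ratio(accuracy_um, tolerance_um, ratio_denominator=10):
    """True if accuracy <= tolerance / ratio_denominator."""
    return accuracy_um * ratio_denominator <= tolerance_um


accuracy_um = 3    # the 3-micron figure from the text
tolerance_um = 10  # a 0.01 mm tolerance

# Under the strict 1/10 rule, 3 microns covers 30 microns (0.03 mm).
strict_limit_um = smallest_tolerance_um(accuracy_um)
print(f"strict 1/10 rule: tolerance >= {strict_limit_um} um")

# Which relaxed ratios does 3 microns satisfy for a 0.01 mm tolerance?
results = {d: meets_ratio(accuracy_um, tolerance_um, d) for d in (10, 5, 3)}
for denominator, ok in results.items():
    print(f"1/{denominator} rule: {'met' if ok else 'not met'}")
```

With these numbers, only the 1/3 ratio is satisfied, which matches the relaxed acceptance described above.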

Interpretation: Inspection accuracy refers to how closely the output agrees with the ground truth when a machine vision system, inspection device, or algorithm identifies, classifies, or measures a target object. It is a core indicator of the reliability and effectiveness of an inspection system, and is widely used in industrial quality inspection, medical imaging, autonomous driving, security monitoring, and other fields.
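Agreement with a ground truth and repeatability are separate things, and a short sketch can keep them apart. It assumes a reference value for the part is available, for instance from a calibrated gauge. The function name `bias_and_range` and all numbers are made up for illustration.

```python
from statistics import mean


def bias_and_range(readings_mm, reference_mm):
    """Return (bias, spread) in mm.

    bias   = mean reading - reference value (closeness to ground truth)
    spread = max - min of the readings (repeatability)
    """
    bias = mean(readings_mm) - reference_mm
    spread = max(readings_mm) - min(readings_mm)
    return bias, spread


# Made-up readings of one part whose reference size is assumed to be 5.000 mm.
readings = [5.002, 5.003, 5.002, 5.004, 5.003]
bias, spread = bias_and_range(readings, reference_mm=5.000)

print(f"bias vs. reference: {bias:+.4f} mm")
print(f"repeatability (max - min): {spread:.4f} mm")
```

A system can repeat tightly and still sit off the reference value, so it helps to report both numbers.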